As a group project, my team built an autonomous robot that plays checkers against a human opponent or against itself. The robot features several enhancements that I worked on heavily:

- Computer vision code that logs the board state and tolerates the camera being rotated or placed at varying distances from the board

- Animatronics that work in tandem with a voice clone of our class TA

- A custom-built checkers game that features 5 game modes and integrates with the robot arm movement

- An electromagnet that picks up and drops pieces on command

- Voice commands for robot arm movement

Additional Details

Video Preview - Robot Plays Against Itself

Computer Vision

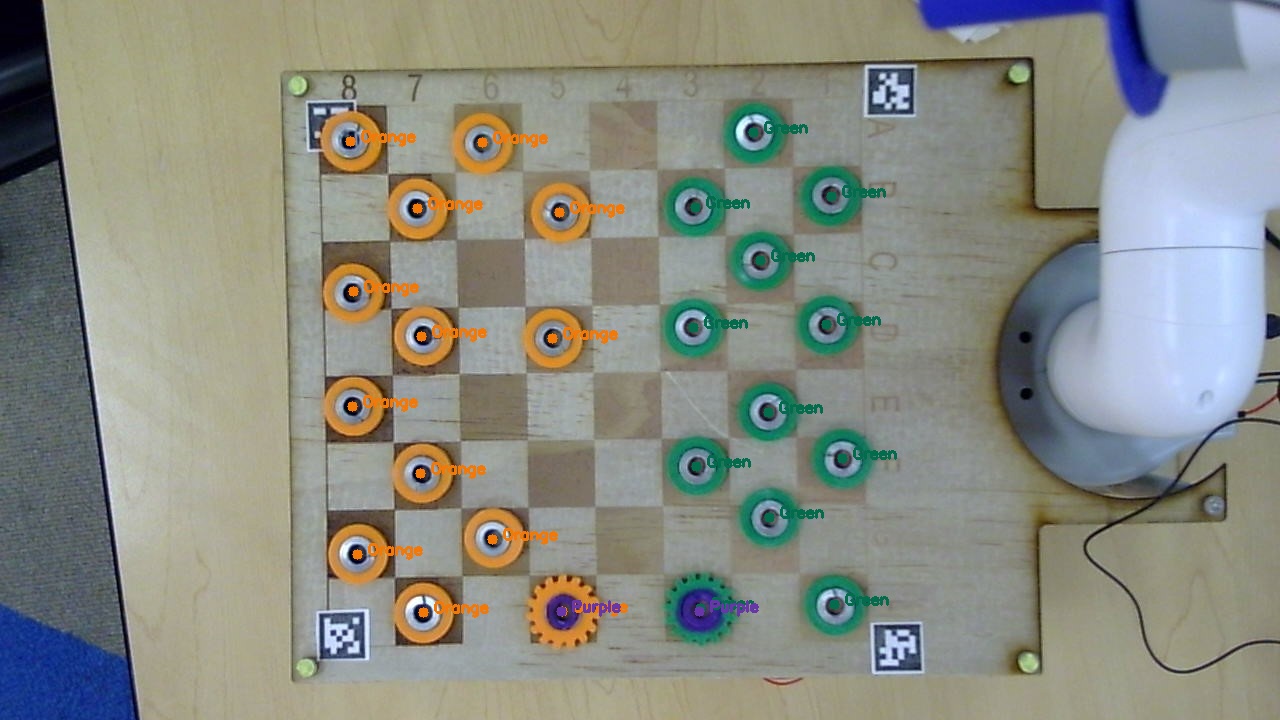

The computer vision integration lets the robot recognize human moves. Video capture allows the game's memory to be updated with the current board layout.

The computer vision module uses a live video feed from a camera on a stand that is detached from the board. We detached the camera and stand because of limited storage space for the robot. As a result, the camera can sit at a variety of locations and rotations relative to the board, so the module must capture not only the piece locations but also the board's location and orientation. This was accomplished using 4 AprilTags; only 3 are required, and the fourth is present for redundancy.
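
One plausible reading of why three tags suffice is that three known points fix an affine map from board coordinates to image pixels. The sketch below illustrates that idea in plain Python; it is not the project's code. The function names, the tag positions, and the choice of an affine model (which ignores perspective distortion) are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_affine(board_pts: List[Point], image_pts: List[Point]) -> Tuple[float, ...]:
    """Return (a, b, c, d, e, f) such that u = a*x + b*y + c and v = d*x + e*y + f.

    board_pts are the assumed tag positions in board units; image_pts are the
    detected tag centers in pixels. Exactly three non-collinear points are needed.
    """
    if len(board_pts) != 3 or len(image_pts) != 3:
        raise ValueError("exactly three correspondences are required")
    base = [[x, y, 1.0] for x, y in board_pts]
    det = _det3(base)
    if abs(det) < 1e-12:
        raise ValueError("tag positions are collinear")
    coeffs = []
    for axis in (0, 1):  # solve once for u, once for v (Cramer's rule)
        rhs = [p[axis] for p in image_pts]
        for col in range(3):
            m = [row[:] for row in base]
            for r in range(3):
                m[r][col] = rhs[r]
            coeffs.append(_det3(m) / det)
    return tuple(coeffs)


def board_to_image(coeffs: Sequence[float], pt: Point) -> Point:
    """Map a point in board units to image pixels."""
    a, b, c, d, e, f = coeffs
    x, y = pt
    return (a * x + b * y + c, d * x + e * y + f)


def square_center(row: int, col: int) -> Point:
    """Center of a square in board units, with one unit per square."""
    return (col + 0.5, row + 0.5)
```

With the map fitted, `board_to_image(coeffs, square_center(row, col))` gives the pixel at which to sample each square regardless of how the camera is rotated. A fourth tag could then serve as a consistency check, or allow a full perspective fit instead of the affine one.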

An HSV filter with contour detection is combined with the known board orientation to identify piece color, location, and king status.
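
The HSV filtering step can be illustrated with a small standard-library sketch. It classifies the pixels sampled from one square by hue and swaps contour detection for a simple pixel vote, so it is a simplification rather than the pipeline described above. The piece colors ("red" and "blue"), the hue windows, and the thresholds are assumptions; king detection is not covered.

```python
import colorsys
from typing import Dict, List, Optional, Sequence, Tuple

RGB = Tuple[int, int, int]

# Hypothetical hue windows in degrees; real values would be tuned to the
# actual pieces and lighting.
HUE_RANGES: Dict[str, List[Tuple[float, float]]] = {
    "red": [(0.0, 20.0), (340.0, 360.0)],  # red wraps around the hue circle
    "blue": [(200.0, 260.0)],
}
MIN_SATURATION = 0.4  # assumed: rejects grey and washed-out pixels
MIN_VALUE = 0.3       # assumed: rejects very dark pixels


def classify_pixel(rgb: RGB) -> Optional[str]:
    """Return the piece color whose hue window contains this pixel, if any."""
    r, g, b = (channel / 255.0 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    if s < MIN_SATURATION or v < MIN_VALUE:
        return None
    hue_deg = h * 360.0
    for name, windows in HUE_RANGES.items():
        if any(lo <= hue_deg <= hi for lo, hi in windows):
            return name
    return None


def classify_square(pixels: Sequence[RGB], min_fraction: float = 0.3) -> Optional[str]:
    """Vote over the pixels of one square; None means the square looks empty."""
    counts: Dict[str, int] = {}
    for px in pixels:
        label = classify_pixel(px)
        if label is not None:
            counts[label] = counts.get(label, 0) + 1
    if not counts:
        return None
    label, hits = max(counts.items(), key=lambda kv: kv[1])
    return label if hits / len(pixels) >= min_fraction else None
```

Filtering on saturation and value before checking hue keeps the board's own dull squares from being mistaken for pieces, which is the main reason to work in HSV rather than RGB.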

Animatronics & Voice Clone

STL files were obtained from Will Cogley (animatronic eyes) and Fanki the Printer (model of daughter’s teeth). The parts printed from Will’s STL files did not fit properly because of tolerance issues, which required multiple adjustments and reprints before they functioned correctly. The teeth mold was not designed for animatronic use, so my teammate Kaelyn modified it to allow the desired movement.

The animatronics were controlled using an Arduino, with serial communication used to sync the mouth movement with the voice clone.

Kent Yamamoto provided the voice for our robot. We recorded samples of Kent speaking complex sentences containing a large variety of English phonemes in various positions within words and sentences. These recordings captured the range of sounds needed to mimic natural speech effectively. We also recorded Kent speaking fluidly and naturally, so that our cloned voice would have a realistic feel.

With the cloned voice we produced over 50 pre-recorded sayings. Additionally, for live and novel interaction, the voice can be accessed through APIs by calling a local LLM (Llamafile) or ChatGPT together with ElevenLabs.

Checkers Game

Video Preview - Robot vs Human

The game was built using object-oriented programming, with classes for the piece, the board, and the game. The piece class holds the data and methods for a piece's location, color, king status, and so on. The board class holds the data and methods for the available moves, the current board layout, and so on. The game class contains the play logic for the human, random, prioritize-jumps, LLM, and minimax actors.
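
A minimal sketch of how such a class layout might look is shown below. It is not the project's code: the class and method names, the "dark"/"light" color labels, and the `Move` helper are assumptions, and multi-jumps, promotion, and the LLM and minimax actors are left out for brevity.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Square = Tuple[int, int]  # (row, col), with row 0 at the light side's back rank


@dataclass
class Piece:
    color: str          # "dark" or "light" (assumed labels)
    king: bool = False

    def directions(self) -> List[Tuple[int, int]]:
        """Diagonal steps this piece may take; kings move both ways."""
        forward = -1 if self.color == "dark" else 1
        rows = (-1, 1) if self.king else (forward,)
        return [(dr, dc) for dr in rows for dc in (-1, 1)]


@dataclass(frozen=True)
class Move:
    start: Square
    end: Square
    captured: Optional[Square] = None  # square of the jumped piece, if any


class Board:
    SIZE = 8

    def __init__(self, pieces: Dict[Square, Piece]):
        self.pieces = dict(pieces)

    def _inside(self, sq: Square) -> bool:
        return 0 <= sq[0] < self.SIZE and 0 <= sq[1] < self.SIZE

    def moves_for(self, color: str) -> List[Move]:
        """Single steps and single jumps available to one side."""
        moves = []
        for (r, c), piece in self.pieces.items():
            if piece.color != color:
                continue
            for dr, dc in piece.directions():
                step = (r + dr, c + dc)
                jump = (r + 2 * dr, c + 2 * dc)
                if self._inside(step) and step not in self.pieces:
                    moves.append(Move((r, c), step))
                elif (self._inside(jump) and jump not in self.pieces
                      and step in self.pieces
                      and self.pieces[step].color != color):
                    moves.append(Move((r, c), jump, captured=step))
        return moves


def random_actor(moves: List[Move], rng: random.Random) -> Move:
    """Pick any legal move."""
    return rng.choice(moves)


def prioritize_jumps_actor(moves: List[Move], rng: random.Random) -> Move:
    """Prefer a capturing move when one exists, otherwise pick any move."""
    jumps = [m for m in moves if m.captured is not None]
    return rng.choice(jumps or moves)
```

Keeping each actor as a function that takes the legal moves and returns one of them is one way to let a game class swap between modes without touching the board logic.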

My teammates designed and printed a custom end effector to hold an electromagnet, which in turn picks up the pieces. I connected the electromagnet to a relay and wrote code so that it operates seamlessly with the robot arm movements. The end effector uses an 8V electromagnet controlled by a 5V wired relay, as 5V was not sufficient to lift the checkers pieces consistently.
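
The coordination between the relay and the arm can be sketched as a context manager that guarantees the magnet is released even if a motion fails. This is an illustration under stated assumptions, not the project's code: `Relay`, `holding`, `pick_and_place`, and the `write_pin` and `arm_move_to` callables are hypothetical stand-ins for whatever GPIO and arm interfaces the robot actually uses.

```python
from contextlib import contextmanager
from typing import Callable, Iterator


class Relay:
    """Thin wrapper around a function that drives the relay's control pin."""

    def __init__(self, write_pin: Callable[[bool], None]):
        self._write_pin = write_pin  # hypothetical hardware hook
        self.energized = False

    def set(self, on: bool) -> None:
        self._write_pin(on)
        self.energized = on


@contextmanager
def holding(relay: Relay) -> Iterator[None]:
    """Energize the electromagnet for the duration of the block."""
    relay.set(True)
    try:
        yield
    finally:
        relay.set(False)  # always drop the piece, even if the arm raises


def pick_and_place(arm_move_to: Callable[[str], None], relay: Relay,
                   source: str, target: str) -> None:
    """Move to the source, grab the piece, carry it to the target, release."""
    arm_move_to(source)
    with holding(relay):
        arm_move_to(target)
```

In a test or simulation, both callables can simply append to a list, which makes the ordering of arm and magnet events easy to check without hardware.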

While playing checkers, there are four main actions the robot can perform. The robot can move a piece from one square to the next, move a piece off the board after a jump or kinging occurs, add a king to the board, or move out of the way to allow a human turn without obstruction. Motion and path planning are integrated with other parts of the robotic system (electromagnet, voice clone, animatronics, and camera) to allow for proper operation of the entire robot.
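
As an illustration of how one game move might expand into these four actions, the sketch below builds an ordered list of steps. The enum, the function name, and in particular the ordering of the steps are assumptions made for the example, not the project's actual planner.

```python
from enum import Enum
from typing import List, Optional, Tuple

Square = Tuple[int, int]
Step = Tuple["Action", Optional[Square], Optional[Square]]  # (action, from, to)


class Action(Enum):
    MOVE_PIECE = "move_piece"      # square to square
    REMOVE_PIECE = "remove_piece"  # square to off-board
    ADD_KING = "add_king"          # off-board to square
    CLEAR_VIEW = "clear_view"      # arm retreats so the human can play


def plan_turn(start: Square, end: Square,
              captured: Optional[Square] = None,
              crowned: bool = False) -> List[Step]:
    """Expand one checkers move into the robot actions it requires."""
    steps: List[Step] = [(Action.MOVE_PIECE, start, end)]
    if captured is not None:
        steps.append((Action.REMOVE_PIECE, captured, None))
    if crowned:
        # Assumed here: the regular piece is swapped for a dedicated king piece.
        steps.append((Action.REMOVE_PIECE, end, None))
        steps.append((Action.ADD_KING, None, end))
    steps.append((Action.CLEAR_VIEW, None, None))
    return steps
```

Each step could then be handed to the motion planner in turn, with the electromagnet, voice, and animatronics triggered at the appropriate points.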

As an added bonus, we integrated voice commands to control the robot's movement. Given more time, we would have made this integration more consistent; however, we did see impressive results in quiet environments.